The Monthly Active Row Has Thorns

MAR is a vestige of legacy batch ETL. Streaming is the engine for Real-Time AI.

Why the "Rose" of Legacy ELT is Pricking Your Budget

The $5 Connection Tax

Starting January 2026, every active connection costs a minimum of $5/month. Low-volume connectors that were once free now add a line item to every bill.
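Here is a rough illustration of how a per-connection floor changes the math. The sketch applies a $5 monthly minimum to each active connection. Only the $5 floor comes from the text above. The connector names and usage charges are hypothetical placeholders, not published rates.

```python
# Minimal sketch: a per-connection monthly minimum.
# Only the $5 floor comes from the article; connector names and usage charges are hypothetical.
MINIMUM_PER_CONNECTION = 5.00  # USD per active connection per month


def monthly_bill(usage_charges: dict[str, float]) -> float:
    """Sum each active connection's charge, raising any charge below the floor to the minimum."""
    return sum(max(charge, MINIMUM_PER_CONNECTION) for charge in usage_charges.values())


# Hypothetical usage charges (USD): three low-volume connections and one busy one.
charges = {"hubspot": 0.40, "stripe": 1.10, "zendesk": 0.00, "postgres_prod": 42.00}
print(f"{monthly_bill(charges):.2f}")  # 5 + 5 + 5 + 42 = 57.00
```

In this made-up example, the three small connectors would have cost $1.50 on usage alone but contribute $15 once the floor applies.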

Stale Data Kills AI

Batch ETL feeds your AI 24-hour-old context. You cannot build intelligent agents on yesterday's news. Latency is the new downtime.

Deletes are the New Revenue

Delete events now count towards paid MAR. You are literally paying to remove data you've already paid to ingest.
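A simplified model of row-based billing makes the delete problem concrete. The sketch assumes that MAR counts each distinct primary key inserted, updated, or deleted at least once in a billing month. This is a simplification, not a vendor's exact definition. The event shape and table names are hypothetical.

```python
from collections import namedtuple

# Hypothetical change-event shape: (table, primary_key, operation).
Event = namedtuple("Event", ["table", "pk", "op"])


def monthly_active_rows(events, count_deletes=True):
    """Count distinct (table, pk) pairs touched in one month (simplified MAR model).

    A key counts once no matter how many times it changes.
    With count_deletes=True, a delete alone is enough to make a key 'active'.
    """
    active = set()
    for e in events:
        if e.op == "delete" and not count_deletes:
            continue
        active.add((e.table, e.pk))
    return len(active)


events = [
    Event("orders", 1, "insert"),
    Event("orders", 1, "update"),     # same key again: still counted once
    Event("orders", 2, "insert"),
    Event("customers", 7, "delete"),  # rows ingested earlier, now being removed
    Event("customers", 8, "delete"),
]
print(monthly_active_rows(events, count_deletes=False))  # 2
print(monthly_active_rows(events, count_deletes=True))   # 4
```

Under this model, cleaning up two old customer records doubles the month's billable row count for this tiny workload.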

The Death of Bulk Discounts

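Pricing has shifted to connector-level isolation. Each connector is priced on its own volume instead of the account's combined volume, so every connector you add rises faster toward the most expensive tiers.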
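Tiered rates show most clearly how bulk discounts disappear. The sketch uses an invented two-tier graduated price schedule, not any vendor's actual rates. It compares two ways of pricing the same total volume:

- pricing the combined volume of several connectors together;
- pricing each connector separately, so each one restarts at the most expensive tier.

```python
# Hypothetical graduated price schedule: (cumulative row ceiling, USD per row in that slice).
TIERS = [(1_000_000, 0.0005), (float("inf"), 0.0002)]


def tiered_cost(rows: int) -> float:
    """Price `rows` with graduated tiers: each slice of volume is billed at its own tier's rate."""
    cost, lower = 0.0, 0
    for upper, rate in TIERS:
        if rows <= lower:
            break
        billable = min(rows, upper) - lower
        cost += billable * rate
        lower = upper
    return cost


connectors = [800_000, 800_000, 800_000]  # hypothetical monthly rows per connector

pooled = tiered_cost(sum(connectors))               # volume discounts apply across the account
isolated = sum(tiered_cost(r) for r in connectors)  # each connector restarts at the first tier
print(f"pooled:   ${pooled:,.2f}")    # pooled:   $780.00
print(f"isolated: ${isolated:,.2f}")  # isolated: $1,200.00
```

Under these made-up rates, the same 2.4 million rows cost about 54% more when each connector is tiered on its own.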

Moving to Continuous Streams

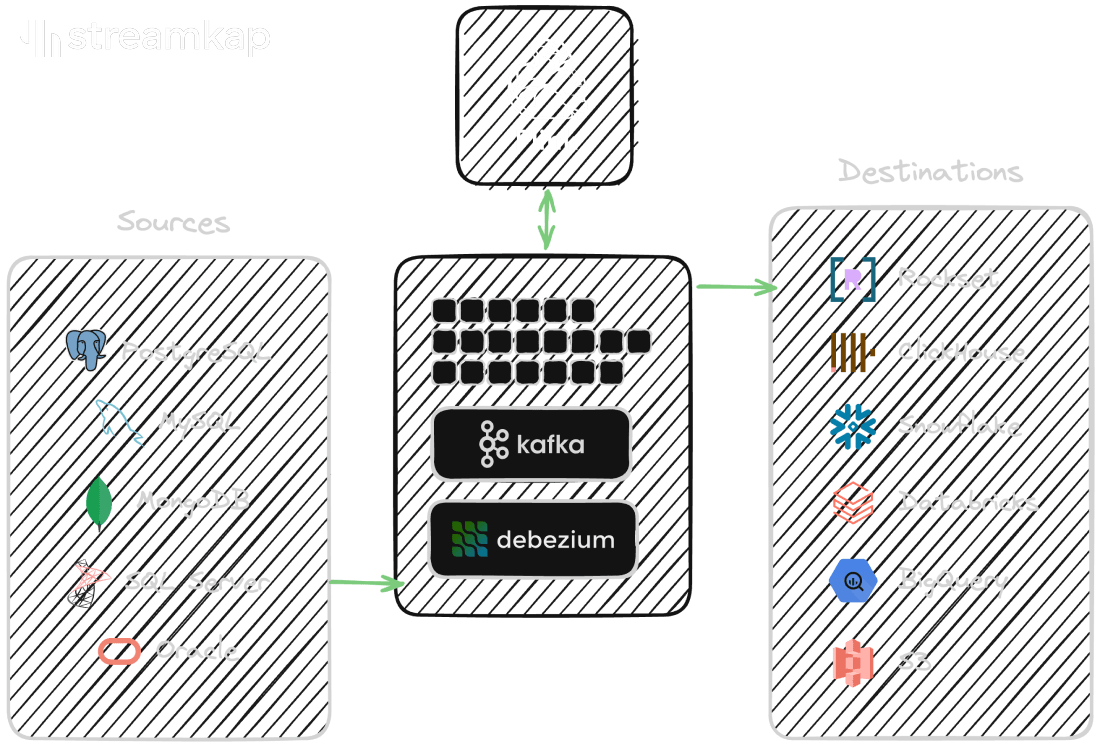

Streamkap utilizes a streaming-first architecture. Instead of micro-batches and files, we hook directly into the database transaction log.

- Sub-second Latency: From log to warehouse in milliseconds. The heartbeat of AI agents.

- Direct Ingestion: Bypass the file stage entirely via Snowpipe Streaming.

- Pricing by Physics: Billed on GB of data moved, not abstract row counts.
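Conceptually, log-based CDC turns the database's transaction log into an ordered stream of change events. A consumer replays that stream against the destination. The sketch below is a generic illustration, not Streamkap's implementation. The event fields (`lsn`, `op`, `key`, `row`) are hypothetical, and an in-memory dict stands in for the warehouse table.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ChangeEvent:
    lsn: int                    # hypothetical log sequence number, increasing in commit order
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row image; None for deletes


@dataclass
class Target:
    rows: dict = field(default_factory=dict)  # stand-in for a warehouse table keyed by primary key
    applied_lsn: int = 0                      # highest LSN already applied

    def apply(self, event: ChangeEvent) -> None:
        # Skip events at or below the last applied position, so redelivered events are harmless.
        if event.lsn <= self.applied_lsn:
            return
        if event.op == "delete":
            self.rows.pop(event.key, None)
        else:  # insert and update both upsert the latest row image
            self.rows[event.key] = event.row
        self.applied_lsn = event.lsn


log = [
    ChangeEvent(1, "insert", 42, {"status": "pending"}),
    ChangeEvent(2, "update", 42, {"status": "paid"}),
    ChangeEvent(3, "insert", 43, {"status": "pending"}),
    ChangeEvent(4, "delete", 43),
]

target = Target()
for e in log + log[:2]:  # the trailing duplicates simulate a redelivery and are ignored
    target.apply(e)
print(target.rows)  # {42: {'status': 'paid'}}
```

Tracking the last applied log position is one common way to make replay idempotent. A real pipeline would persist that position alongside the data.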

Voice of the Data Engineer

"We are experiencing a huge spike (more than double) in monthly costs following the latest changes... the pricing shoots up dramatically."

r/dataengineering

"Our current priority is to dump as much Fivetran as we can as fast as possible. Just moving NetSuite off will cut our bill by 50%."

u/latro87

"It makes it really hard to estimate costs before you get the bill. Each connector is priced independently, making cost optimization harder."

r/dataengineering

The Mathematical Verdict

| Metric | Fivetran (Legacy) | Streamkap (Modern) |
|---|---|---|
| Pricing Model | Monthly Active Rows (MAR) | Data Volume (GB) |
| Latency | 15 min - 1 hour | < 1 second |
| Snowflake Load Cost | High (Batch COPY INTO) | Low (Snowpipe Streaming) |
| Data Fidelity | Low (Snapshots) | High (Log-based CDC) |
| AI Readiness | Low (Stale Context) | Real-Time (Instant Action) |
| GenAI Use Cases | Historical Reporting | Autonomous Agents |
| Security | SaaS Processing | BYOC (Your VPC) |
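The "Data Fidelity" row comes down to what each approach can observe. A periodic snapshot only sees the state at each poll. It misses values that are overwritten between polls, and rows that are created and deleted between polls never appear at all. A log-based reader sees every committed change. The toy comparison below illustrates this general difference; the change history and poll times are hypothetical.

```python
# Hypothetical history of committed changes: (time_in_seconds, key, value); value None = deleted.
history = [
    (10, "order-1", "pending"),
    (20, "order-1", "paid"),
    (30, "order-1", "refunded"),
    (40, "order-2", "pending"),
    (50, "order-2", None),  # created and deleted between polls
]


def state_at(t):
    """Reconstruct table state as of time t by replaying the (time-ordered) history."""
    state = {}
    for ts, key, value in history:
        if ts > t:
            break
        if value is None:
            state.pop(key, None)
        else:
            state[key] = value
    return state


# Snapshot approach: poll at t=0 and t=60, then diff the two states.
before, after = state_at(0), state_at(60)
changed_keys = before.keys() | after.keys()
snapshot_changes = {k: after.get(k) for k in changed_keys if before.get(k) != after.get(k)}

print(len(history))      # 5 changes visible to a log-based reader
print(snapshot_changes)  # {'order-1': 'refunded'}: only the net difference survives
```

The snapshot diff loses the pending-to-paid transition and never learns that `order-2` existed. Those are the events an AI agent acting on intent would care about.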

AI Agents Need Now, Not Yesterday

🚫 The Legacy Trap

Legacy ETL relies on batches. By the time your AI agent receives the data, the "active row" is already history.

An AI agent acting on 24-hour-old data isn't intelligent. It's dangerous.

⚡ The Streaming Reality

Real-time context allows AI to act on intent, not just history. Capture the event the millisecond it happens.

Reduce TCO by 3x

By aligning pricing with physics and architecture with speed, Streamkap offers a lower Total Cost of Ownership while delivering the real-time fuel your AI needs.

Proven at Scale

SpotOn

Reduced data latency to seconds, enabling restaurant reports in under 5 minutes, a 4x improvement over previous SLAs.

Limble

Collapsed 400,000 shards into a single pipeline, cutting maintenance from 24 hours/month to just 2 hours/month.

Nala Money

Achieved real-time visibility into mid-market FX rates, protecting margins with dynamic pricing adjustments.

Ready to stop counting rows and start streaming value?

De-thorn your stack in 3 steps. Move to the next generation of data movement.

Get Started with Streamkap